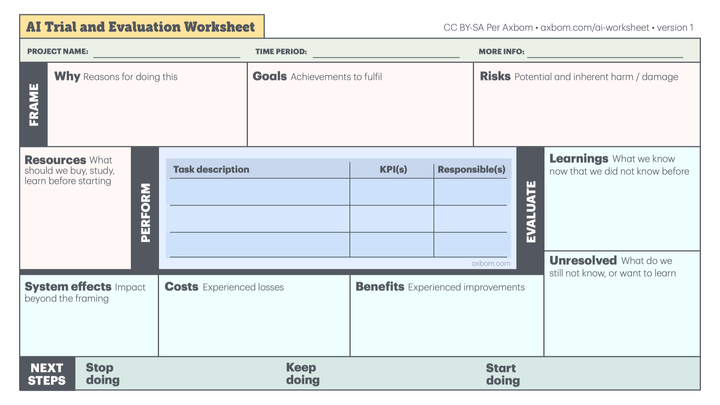

AI Trial and Evaluation Worksheet

Many people tell me that their companies are running trials of AI tools. That in itself is not strange at all. It is probably hard for a leader today not to feel pressured by AI vendors promising revolutionary capabilities that will radically change all of business.